Use Case: Event Sourcing for Insurance Claims

Welcome to my new series of blog posts. In the past, I have often discussed specific technologies or technical patterns. However, as I gain more experience in my field, I realize that contextual writing can provide the "so what" behind a technology choice or pattern. In this series, I will introduce specific use cases with clear business requirements and constraints. I will then provide architectural solutions to deliver such applications. Join me for this journey.

The Use Case

Imagine your client is an insurance company that operates an Order Management System (OMS). This system is the repository for all claims information related to insurance policies. The client wants you to build a web portal that generates a report from the OMS and presents it in PDF format.

There are constraints, however. For security and performance reasons, direct access to the OMS database is restricted, except for a potential one-time migration. Instead, you consume data from a streaming API. The client has chosen AWS Kinesis as the event streaming service. The event stream retains 15 days of history, and processing all the events required to bring a document or order up to date may take up to 5 minutes. Note also that the event log is not normalized.

Your task is to create a modern web application with two main components: the "Order Exporter" module and the "My Profile" section for employees.

The "Order Exporter" module is designed to generate PDF documents based on configurable templates for specific orders. The requirements for this module include:

- Storing editable templates that can be merged with order information to create HTML pages viewable in web browsers.

- Converting HTML pages to downloadable PDFs through an API endpoint.

- Ensuring the API responds in less than 2 seconds and scales to handle up to 1,000 requests per second.

- Enabling PDFs to be shared via unique URLs that expire after 48 hours.
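The first requirement, merging a stored template with order information to produce an HTML page, can be sketched with Python's standard library. This is only an illustration: the template text, the field names (`order_id`, `holder`, `amount`), and the `render_order_html` helper are assumptions, not part of the client's specification.

```python
from html import escape
from string import Template

# Hypothetical stored template; placeholders use string.Template's $name syntax.
TEMPLATE_SOURCE = """<html><body>
<h1>Order $order_id</h1>
<p>Policy holder: $holder</p>
<p>Claim amount: $amount</p>
</body></html>"""


def render_order_html(template_source: str, order: dict[str, object]) -> str:
    """Merge order fields into the template, HTML-escaping every value."""
    safe_values = {key: escape(str(value)) for key, value in order.items()}
    # substitute() raises KeyError if the template needs a field the order lacks.
    return Template(template_source).substitute(safe_values)


page = render_order_html(
    TEMPLATE_SOURCE,
    {"order_id": "A1", "holder": "Ada <Lovelace>", "amount": "250.00"},
)
```

Escaping each value before substitution keeps order data from injecting markup into the page that is later converted to PDF.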

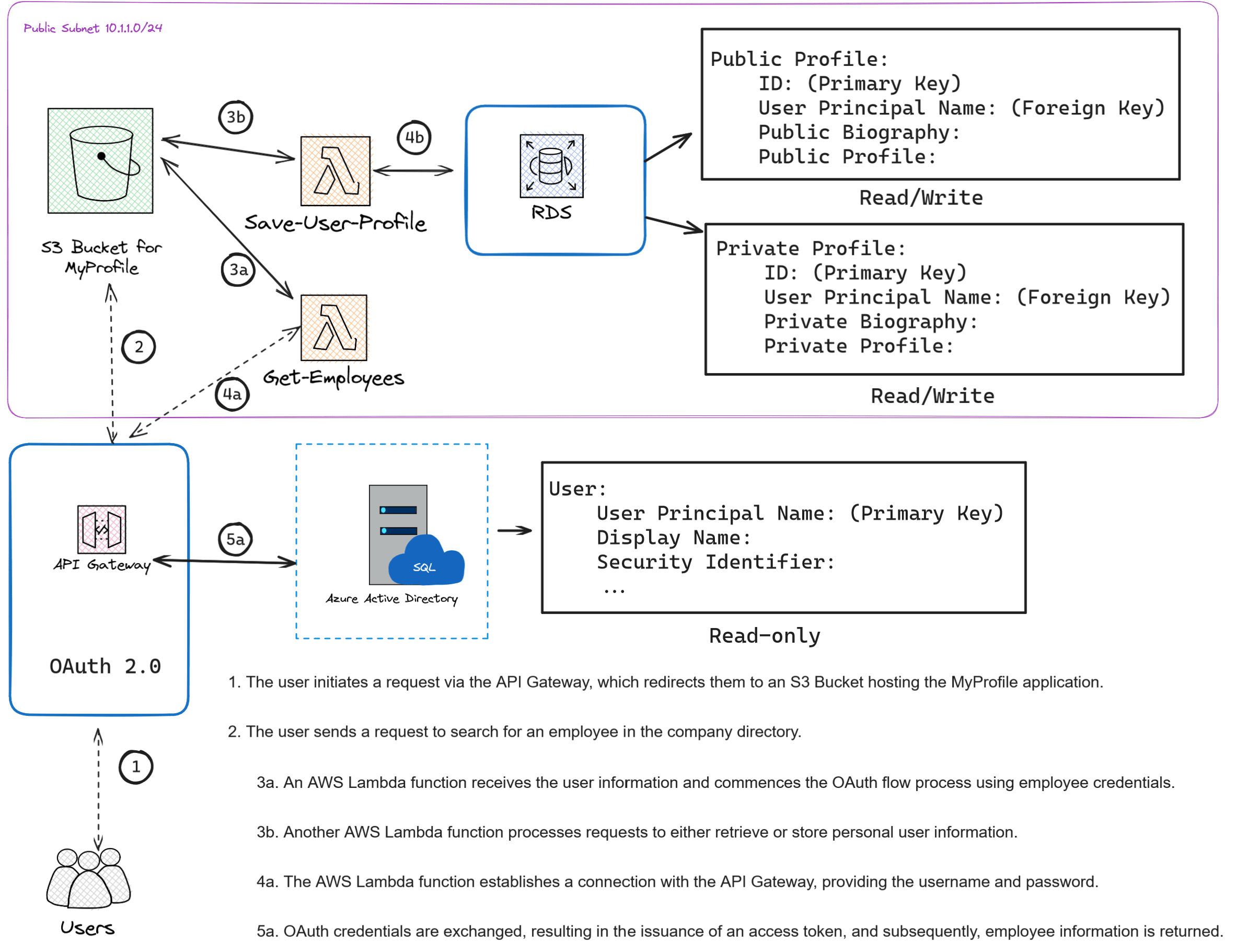

The "My Profile" section, by contrast, serves as an internal directory for employees. It lets them view and update their own information and see the publicly available data of other employees. The section draws its base data from a read-only Identity Management System and lets employees add writable information of their own, marking each field as public or private.
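The public/private rule can be expressed as a small filter. The sketch below assumes a hypothetical `ProfileField` record and employee identifiers; how fields are actually stored is not specified in the use case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProfileField:
    """One employee-contributed field (hypothetical shape)."""
    name: str
    value: str
    is_public: bool


def visible_fields(
    fields: list[ProfileField], viewer_id: str, owner_id: str
) -> dict[str, str]:
    """Owners see every field; other employees see only public fields."""
    if viewer_id == owner_id:
        return {f.name: f.value for f in fields}
    return {f.name: f.value for f in fields if f.is_public}


profile = [
    ProfileField("display_name", "Sam Lee", is_public=True),
    ProfileField("mobile", "555-0100", is_public=False),
]
seen_by_colleague = visible_fields(profile, viewer_id="u2", owner_id="u1")
seen_by_owner = visible_fields(profile, viewer_id="u1", owner_id="u1")
```

Applying the filter on the server side, rather than in the browser, keeps private fields from ever leaving the API.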

The proposed solution must address these requirements, with key deliverables including:

- Architecture diagrams and data flow diagrams.

- Selection of tools, services, databases, and programming languages, with justifications for their choices.

- Estimated costs for infrastructure setup and ongoing operations.

- Security considerations to safeguard data.

- A clear deployment strategy.

Guiding Principles

I personally like to begin every architecture assessment with guiding principles. Guiding principles help design solutions using proven patterns that adhere to best practices. Each problem is unique and requires its own set of principles. For this project, clear separation of concerns is paramount due to the sensitive data involved. Additionally, limiting access to data is crucial. The guiding principles for this project are as follows:

- Separation of Concerns

- Least Privilege

- Modularity / Scalability

- Observability

- CQRS (Command and Query Responsibility Segregation)
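One way to read CQRS in this use case: the OMS owns the command side, and the portal keeps a separate query-side view that is fed only by the event stream. The sketch below is a minimal in-memory illustration; the class name and the event keys (`order_id`, `payload`) are assumptions.

```python
from typing import Any, Optional


class OrderReadModel:
    """Query side: a denormalized view of orders, keyed by order id."""

    def __init__(self) -> None:
        self._orders: dict[str, dict[str, Any]] = {}

    def project(self, event: dict[str, Any]) -> None:
        """Write path: called only by the stream consumer."""
        order = self._orders.setdefault(event["order_id"], {})
        order.update(event["payload"])

    def get_order(self, order_id: str) -> Optional[dict[str, Any]]:
        """Read path: called only by the report API; returns a copy."""
        order = self._orders.get(order_id)
        return dict(order) if order is not None else None


read_model = OrderReadModel()
read_model.project({"order_id": "A1", "payload": {"amount": 100}})
read_model.project({"order_id": "A1", "payload": {"status": "approved"}})
report_data = read_model.get_order("A1")
```

Keeping the two paths in separate methods, with separate callers, also supports the least-privilege principle: the report API never needs credentials for the stream.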

Outlining Business and Technical Requirements

After reviewing the use case, the next step is to break down the requirements into tangible categories: business requirements and technical requirements. This breakdown serves to explicitly define the client's expectations.

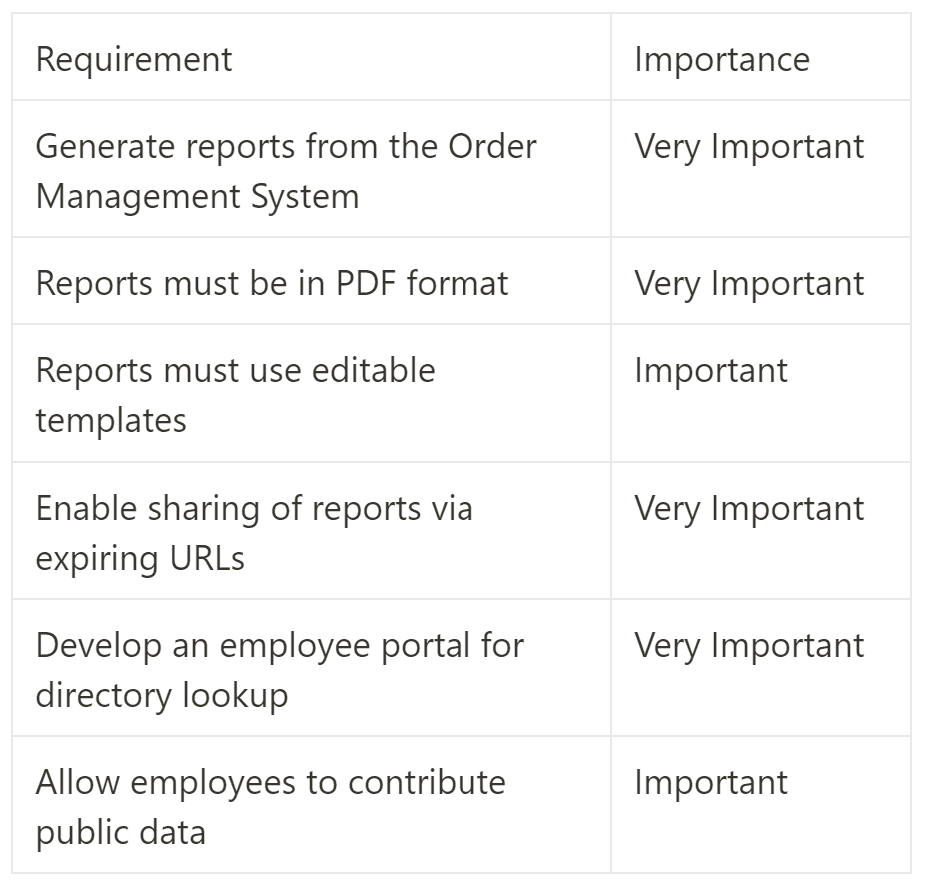

Business Requirements

Technical Requirements

It's worth noting the introduction of "importance" and "complexity" columns within both sets of requirements. This distinction allows prioritization and provides a clear understanding of the client's expectations and the time investment required.

Technical Constraints

Software architecture thrives on constraints, which guide the design process around fixed parameters. Understanding these constraints, whether technical or procedural, is essential to proposing effective solutions.

Key technical constraints include:

- No direct access to the Order Management System (OMS) database.

- Replaying the events needed to bring an order up to date, and therefore generating its report, may take up to 5 minutes.

- Event stream retains 15 days of history.

- AWS Kinesis for event streaming and Azure for Identity Management.

- Secured access to the reports service is required.

- Need for traceability in report access.

- Data is not normalized.
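Two of these constraints interact: with only 15 days of history in the stream, anything older has to come from somewhere else, such as the one-time migration. A rough sketch of rebuilding an order from a migrated snapshot plus the retained events follows. The event keys (`timestamp`, `payload`) and the snapshot shape are assumptions, and the cutoff filter simply mirrors what the stream itself would return.

```python
from datetime import datetime, timedelta, timezone
from typing import Any

RETENTION = timedelta(days=15)  # stream history available, per the constraints


def rebuild_order(
    snapshot: dict[str, Any], events: list[dict[str, Any]], now: datetime
) -> dict[str, Any]:
    """Start from the migrated snapshot, then apply retained events in time order."""
    cutoff = now - RETENTION
    state = dict(snapshot)
    # Events older than the cutoff are no longer in the stream, so they
    # must already be reflected in the snapshot.
    retained = [e for e in events if e["timestamp"] >= cutoff]
    for event in sorted(retained, key=lambda e: e["timestamp"]):
        state.update(event["payload"])
    return state


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
snapshot = {"order_id": "A1", "amount": 100}
events = [
    {"timestamp": datetime(2024, 1, 25, tzinfo=timezone.utc), "payload": {"status": "approved"}},
    {"timestamp": datetime(2024, 1, 1, tzinfo=timezone.utc), "payload": {"amount": 999}},
    {"timestamp": datetime(2024, 1, 20, tzinfo=timezone.utc), "payload": {"amount": 250}},
]
order = rebuild_order(snapshot, events, now)
```

Persisting the rebuilt state as a new snapshot would shorten later replays, which matters given the 5-minute worst case.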

Addressing Open Questions

Asking questions is a crucial aspect of understanding and addressing the client's needs.

- Why is the data retention period only 15 days if AWS Kinesis supports a longer timeframe?

- What types of public data can employees contribute?

- Is there any password protection for viewing the generated PDFs?

- How many shards are employed in the AWS Kinesis event store?

- Are there specific data residency compliance requirements for data storage?

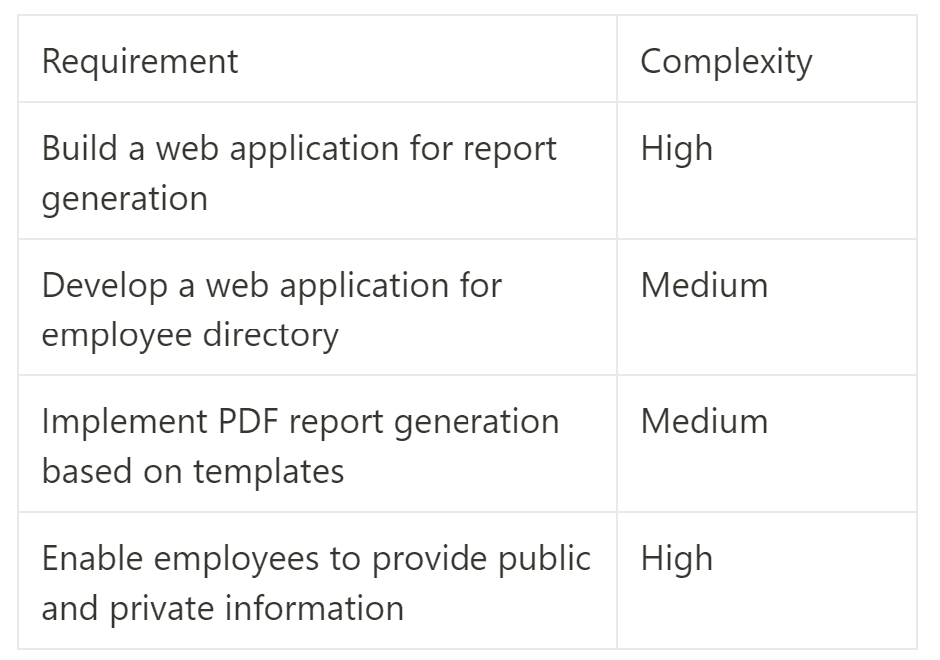

High-Level Architecture Diagram

A high-level architecture in software engineering outlines the foundational structure and organization of a software system or application at a conceptual level. It offers an abstract representation of component interactions and design principles without delving into specific implementation details.

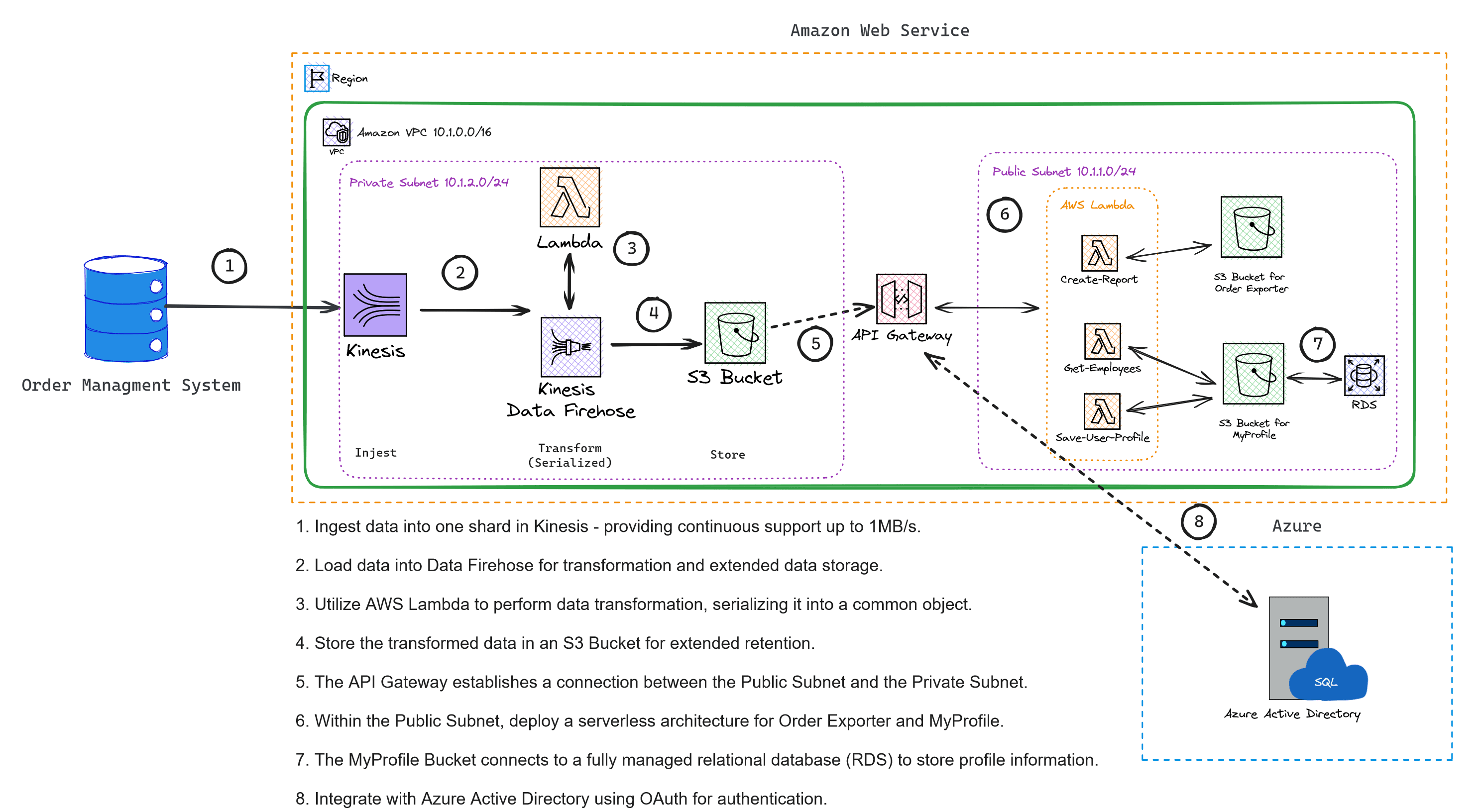

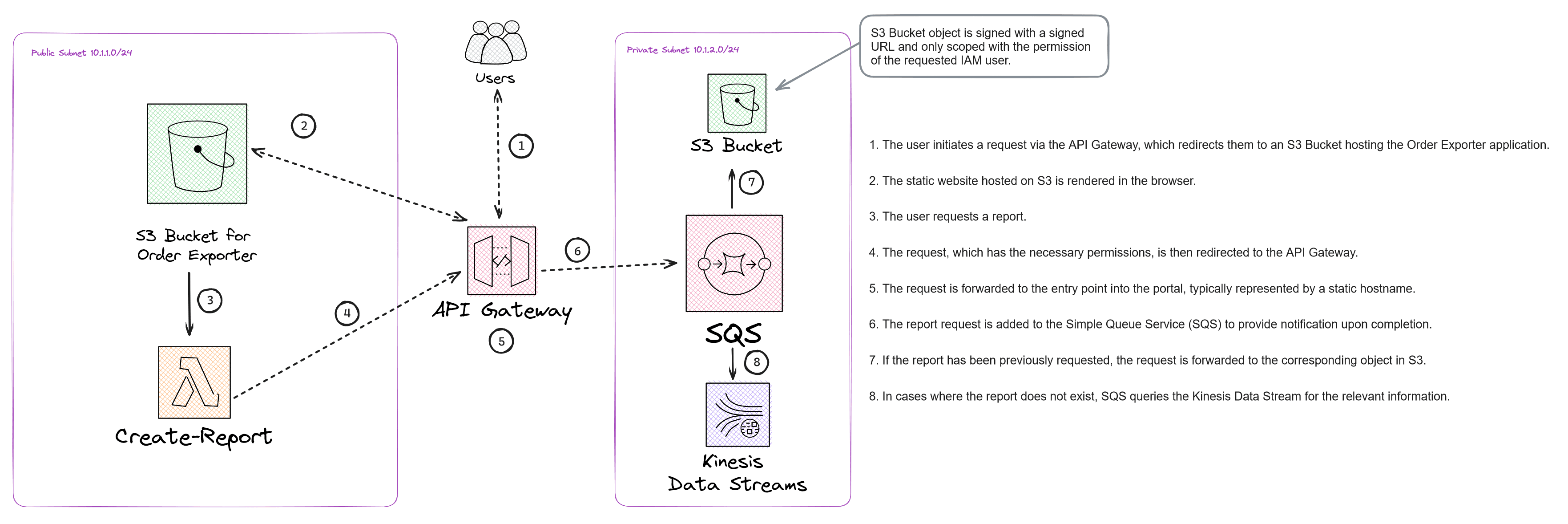

Data Flow: Requesting a Report

At the core of every architectural solution lies the journey of data. In this exercise, we visualize the lifecycle of a report, from user request to delivery in PDF format. This visualization aids in identifying decision points and logic requirements.

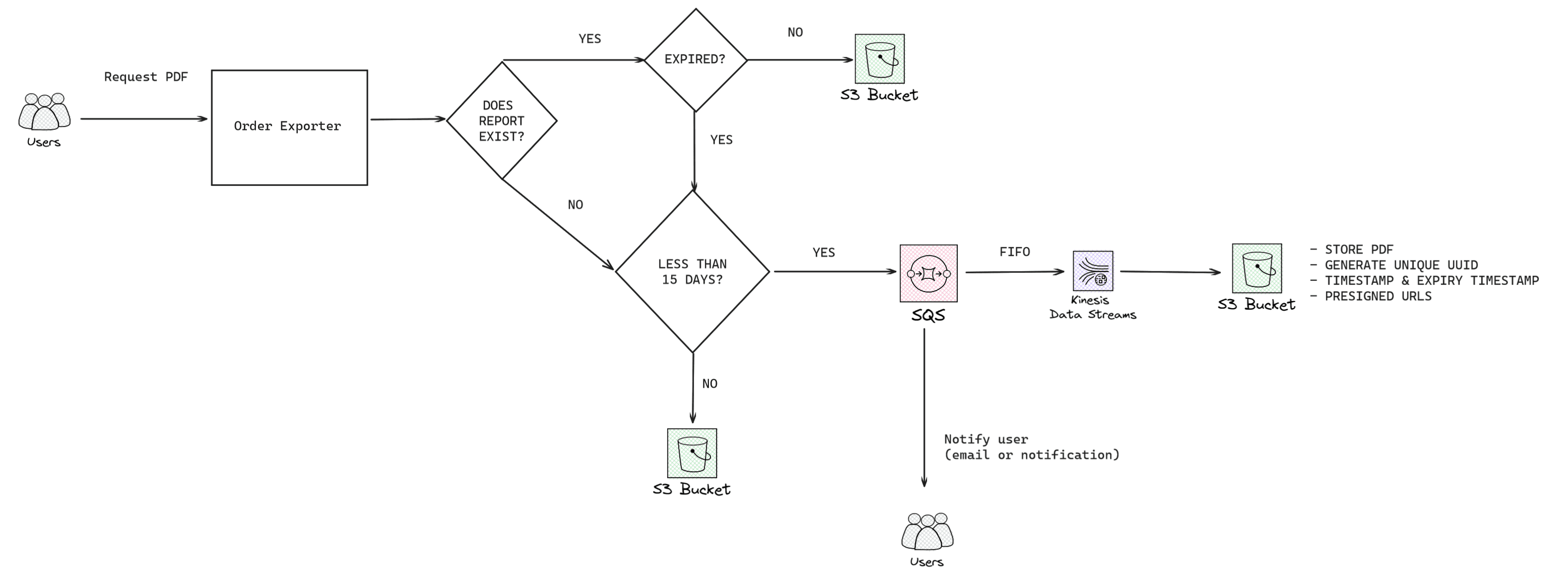

My Profile Solution Architecture

Zooming into the My Profile application, we gain a deeper understanding of its solution architecture.

Order Exporter Solution Architecture

Next, we zoom into the solution architecture for the Order Exporter module.

Technology Recommendations

For this project, I recommend the following technologies:

- React.js and React-PDF (https://react-pdf.org/) for creating dynamic PDFs.

- AWS Lambda functions written in Python for data processing and manipulation.

- Fastify for APIs defined by an OpenAPI specification.

Deployment Strategies

To deploy this solution effectively, consider the following strategies:

- Treat this as a greenfield deployment.

- Use a GitOps process.

- Store infrastructure as Terraform modules.

- Follow Gitflow practices.

- Run end-to-end tests with mock data.

- Run end-to-end integration tests.

Security Recommendations

To ensure data security, adhere to the following recommendations:

- Use an API Gateway to validate every request against Active Directory.

- PDF reports must be served through pre-signed URLs that are scoped to the company's internal network.

- Limit concurrent calls to the streaming API when the requests are all for data older than 15 days.

Cost Recommendation

The cost projection varies with load, usage frequency, and data storage volume. Because the solution runs entirely on cloud services, costs scale with these variables. The estimates below are monthly figures at the stated loads.

| Service | Load | Monthly Cost |
|---|---|---|
| AWS Kinesis Firehose | 300,000 records per second | $12,165.45 USD |
| AWS Lambda | 10,000,000 requests per month | $2.00 USD |
| AWS S3 Bucket | 100 GB per month | $2.50 USD |
| AWS API Gateway | 10,000,000 requests per month | $11.10 USD |
| AWS RDS | db.t2.large | $249.22 USD |
| AWS SQS | 10,000,000 requests per month | $3.60 USD |

Monthly Cost: $12,433.87 USD

Yearly Cost: $149,206.44 USD
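The totals can be reproduced from the per-service figures with a few lines of Python. The amounts are copied from the estimates above; `Decimal` is used so the sums are exact to the cent.

```python
from decimal import Decimal

# Monthly estimates per service, in USD, as listed above.
monthly_costs = {
    "AWS Kinesis Firehose": Decimal("12165.45"),
    "AWS Lambda": Decimal("2.00"),
    "AWS S3 Bucket": Decimal("2.50"),
    "AWS API Gateway": Decimal("11.10"),
    "AWS RDS": Decimal("249.22"),
    "AWS SQS": Decimal("3.60"),
}

monthly_total = sum(monthly_costs.values(), Decimal("0"))
yearly_total = monthly_total * 12
kinesis_share = monthly_costs["AWS Kinesis Firehose"] / monthly_total

print(f"Monthly: ${monthly_total:,.2f}")
print(f"Yearly:  ${yearly_total:,.2f}")
print(f"Kinesis share of monthly cost: {kinesis_share:.1%}")
```

The streaming service accounts for nearly all of the monthly total, so the assumed record rate is the number most worth validating with the client.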

In Conclusion: Navigating Event Sourcing for Insurance Claims

This use case combined event sourcing with real-world constraints: building a web portal that generates PDF reports while respecting the security restrictions on the OMS, working from a 15-day event stream, and serving both the Order Exporter and My Profile features.

We started from guiding principles, broke down the requirements and constraints, and traced the data's path from a user's request to a delivered PDF.

This is only a first look at contextual architectural problem-solving. Later posts in this series will take on more use cases and new challenges.

Thank you for joining us on this ride. Until next time!