Using Adobe Target to decide the best machine learning model

At Telus Digital, we turn to machine learning models to recommend products, predict users’ behaviour, and identify opportunities to personalize the experience for our users. Like most companies, we are investing heavily in machine learning and artificial intelligence to help us drive business goals.

As we approach maturity in our data science practice, we need a way to validate the performance of our models against each other, and one way to achieve this is to run an A/B test.

The Opportunity

We have been extremely successful with personalization and A/B testing, mainly due to our ability to integrate with Adobe Target server-side. Since we developed our Personalization API, our personalization and A/B testing practice has skyrocketed: we can now deliver fully personalized content to our customers in under 200 ms.

Historically, we have only used Adobe Target to test content, since the Personalization API is integrated with Contentful (our CMS). However, an interesting question was put to my team: was it possible to A/B test different models instead of content?

The Recommendation Engine

The Data Science team within Telus Digital built a recommendation engine to recommend different TV channels to our customers.

The recommendation engine has three different models to recommend the best channels:

- Location-based: Based on a user’s location, it recommends what other users around them have watched.

- Random: Randomly recommends channels to users.

- Popular: Recommends the most popular channels to users.
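To make the three strategies concrete, here is a minimal Python sketch of each. The post does not describe the engine's data model, so the viewing-history tuples, region codes, channel names, and function names below are all hypothetical; treat this as an illustration of the ideas, not the Data Science team's implementation.

```python
import random
from collections import Counter
from typing import List, Optional

# Hypothetical viewing history: (user_id, region, channel).
VIEWS = [
    ("u1", "V6B", "sports"),
    ("u2", "V6B", "sports"),
    ("u3", "V6B", "cooking"),
    ("u4", "M5V", "news"),
    ("u5", "M5V", "cooking"),
    ("u6", "M5V", "cooking"),
]
CATALOGUE = sorted({channel for _, _, channel in VIEWS})


def location_based(region: str, k: int = 2) -> List[str]:
    """Channels most watched by users in the given region."""
    counts = Counter(channel for _, r, channel in VIEWS if r == region)
    return [channel for channel, _ in counts.most_common(k)]


def random_based(k: int = 2, rng: Optional[random.Random] = None) -> List[str]:
    """k distinct channels drawn uniformly at random from the catalogue."""
    rng = rng or random.Random()
    return rng.sample(CATALOGUE, k)


def popular(k: int = 2) -> List[str]:
    """Channels most watched across all users."""
    counts = Counter(channel for _, _, channel in VIEWS)
    return [channel for channel, _ in counts.most_common(k)]
```

With this toy data, `location_based("V6B")` returns `["sports", "cooking"]`, while `popular()` returns `["cooking", "sports"]`: the same catalogue, ranked differently depending on the strategy.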

As the team deployed the Recommendation Engine into production, they needed a way to test which of the three models performed best. This is where we had our eureka moment: we would use Adobe Target and the Personalization API to run A/B tests across the different models.

The Solution

When we started to brainstorm what the architecture would look like, we outlined two guiding principles:

- Our solution would favour a decoupled architecture, with each system knowing as little as possible about the others.

- Our solution had to be reusable, so that other services could consume it.

The first step was to write our A/B test hypothesis:

If the customer sees a recommended channel, then they will add it to their cart because the recommendation is appropriate, confirming that the recommendation engine recommended correctly.

Stating our conversion goal (adding a channel to the shopping cart) gives us a measurable metric to test against.
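Because the goal is a single action, each Experience's performance reduces to a conversion rate: visitors who added a channel to their cart, divided by visitors who saw that Experience. The sketch below assumes those two counts are available per Experience and compares a treatment against the control; `ExperienceResult` and `relative_lift` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ExperienceResult:
    name: str
    visitors: int      # unique visitors who were shown this Experience
    add_to_carts: int  # of those, visitors who added a channel to their cart

    @property
    def conversion_rate(self) -> float:
        # Guard against an Experience that received no traffic.
        return self.add_to_carts / self.visitors if self.visitors else 0.0


def relative_lift(treatment: ExperienceResult, control: ExperienceResult) -> float:
    """How much higher (or lower) the treatment converts than the control, as a fraction."""
    baseline = control.conversion_rate
    if baseline == 0:
        raise ValueError("control has no conversions, so relative lift is undefined")
    return treatment.conversion_rate / baseline - 1.0
```

A relative lift of 0.25, for example, means the treatment's add-to-cart rate is 25% higher than the control's. Comparing rates this way says nothing about statistical significance, which needs a separate check.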

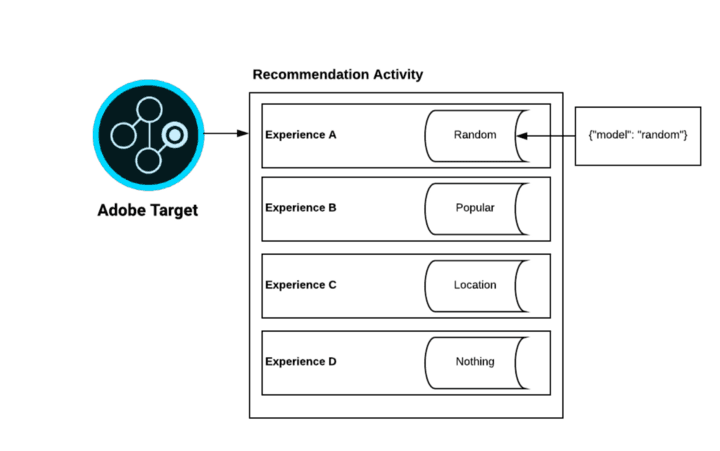

Once we had set our hypothesis and conversion goal, we created an Activity in Adobe Target with four Experiences: one for each of the three models, plus one with no recommendation to serve as our control. In each Experience, rather than storing the content to show our users, we stored a JSON payload such as {"model": "random"}.
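A minimal sketch of how a service might interpret those payloads. Only `{"model": "random"}` comes from the post; the other keys (`"location"`, `"popular"`) and the `"control"` value for the no-recommendation Experience are assumptions, and the stub functions stand in for real models.

```python
import json
from typing import Callable, Dict, List

Recommender = Callable[[str], List[str]]


def _stub(name: str) -> Recommender:
    # Placeholder for a real model: returns one tagged pick so the dispatch is visible.
    return lambda user_id: [f"{name}-pick-for-{user_id}"]


# "random" matches the post's example payload; the other keys are assumptions.
MODELS: Dict[str, Recommender] = {
    "location": _stub("location"),
    "random": _stub("random"),
    "popular": _stub("popular"),
}
CONTROL = "control"  # hypothetical payload value for the no-recommendation Experience


def recommend_from_payload(payload: str, user_id: str) -> List[str]:
    """Run whichever model the Experience payload names, e.g. '{"model": "random"}'."""
    model = json.loads(payload).get("model")
    if model == CONTROL:
        return []  # control Experience: show no recommendation
    if model not in MODELS:
        raise ValueError(f"unknown model in Experience payload: {model!r}")
    return MODELS[model](user_id)
```

Raising on an unknown value surfaces a misconfigured Experience quickly; a production service might instead log it and fall back to the control.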

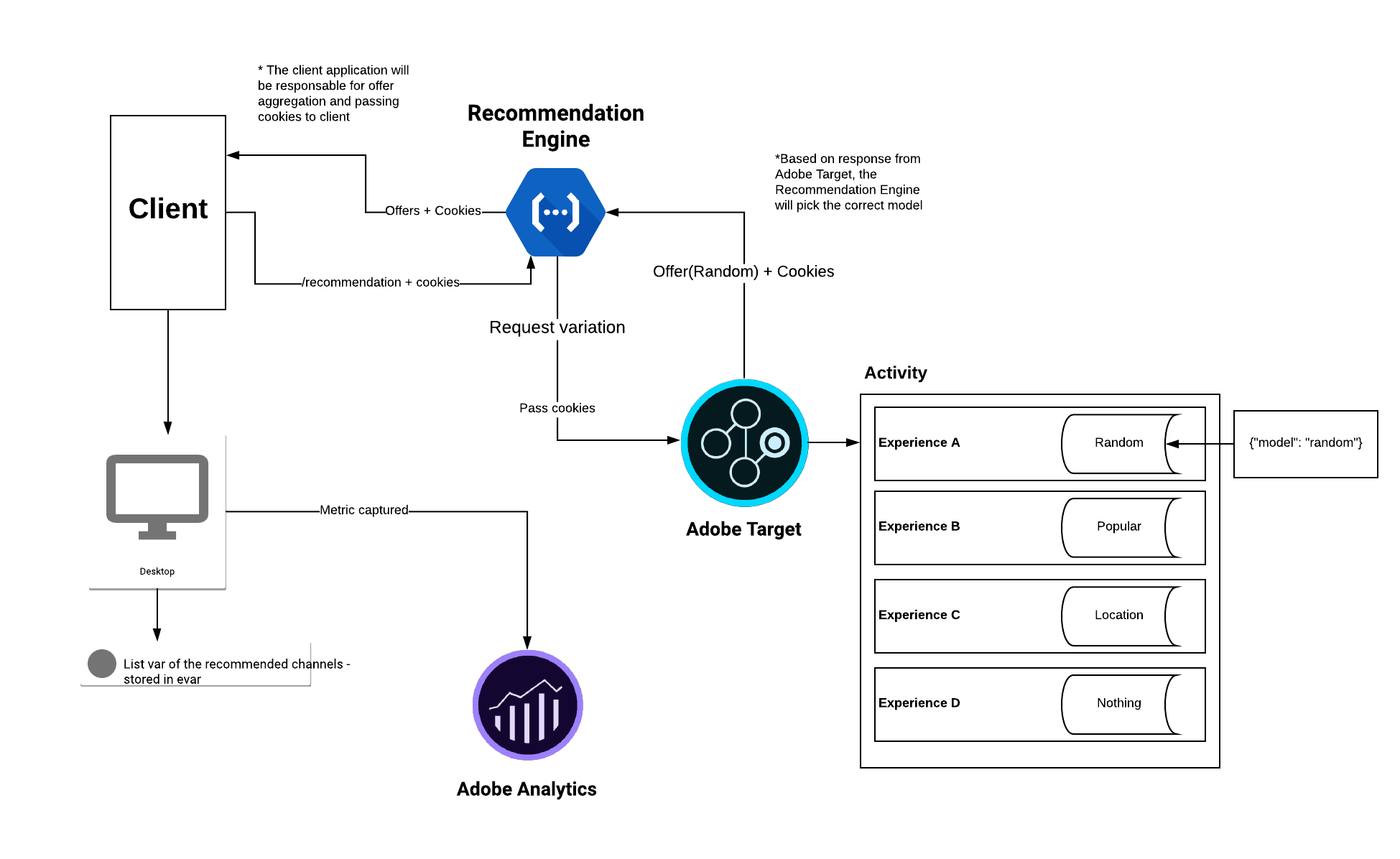

Finally, the sequence of events is as follows:

- The client application requests a prediction from the Recommendation Engine API.

- The Recommendation Engine API calls Adobe Target, passing along the user's MCID cookie.

- Adobe Target evaluates the cookie and returns one of the four Experiences to the Recommendation Engine API, along with its cookies.

- The Recommendation Engine API reads the JSON payload returned by Adobe Target and, based on its value, chooses the appropriate model.

- The Recommendation Engine API returns the prediction to the client application, along with the Adobe Target cookies.

- The client application writes those cookies to the browser, enabling Adobe Analytics to see which Adobe Target Experience is being shown to the customer.
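The round trip in these steps can be sketched as a single request handler. To honour the decoupling principle, the engine here depends only on a small `DecisionProvider` interface rather than on Adobe Target directly. `DecisionProvider`, `Decision`, `PredictionResponse`, `handle_prediction`, and the `mcid` cookie name are all hypothetical; a real implementation would place an Adobe Target client behind the interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol

MCID_COOKIE = "mcid"  # hypothetical name; use whichever cookie carries the MCID in your setup


@dataclass
class Decision:
    payload: str             # Experience content, e.g. '{"model": "random"}'
    cookies: Dict[str, str]  # cookies the decision service wants persisted in the browser


class DecisionProvider(Protocol):
    """Minimal interface the engine depends on; an Adobe Target client would implement it."""

    def decide(self, mcid: str) -> Decision: ...


@dataclass
class PredictionResponse:
    channels: List[str]
    set_cookies: Dict[str, str] = field(default_factory=dict)


def handle_prediction(request_cookies: Dict[str, str],
                      provider: DecisionProvider,
                      recommend: Callable[[str], List[str]]) -> PredictionResponse:
    # Forward the visitor's MCID so the decision service can keep them in the same Experience.
    decision = provider.decide(request_cookies.get(MCID_COOKIE, ""))
    # Let the payload, not the engine, decide which model runs.
    channels = recommend(decision.payload)
    # Hand the decision service's cookies back so the client can write them to the browser.
    return PredictionResponse(channels=channels, set_cookies=dict(decision.cookies))
```

Swapping Adobe Target for another decision service, or for a test double, then requires no change to the engine, which is also what lets other services reuse the same pattern.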

This solution allowed our systems to interact while remaining decoupled from each other. We were also able to tune the amount of traffic each model received during the test period.
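In this setup, Adobe Target decides which Experience each visitor sees, so the engine never has to. Purely to illustrate what tuning the allocation means, here is a sketch of weighted, sticky assignment: a visitor's ID is hashed to a stable point and mapped onto the Experiences in proportion to their weights. This is a generic technique, not Adobe Target's actual algorithm, and `assign_experience` is a hypothetical name.

```python
import hashlib
from typing import Dict


def assign_experience(mcid: str, weights: Dict[str, float], activity: str = "model-test") -> str:
    """Sticky, weighted assignment of a visitor to one Experience.

    Weights must be non-negative; they are relative, so {"a": 3, "b": 1} means 75% / 25%.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Hash activity + visitor to a stable 53-bit integer, then scale it into [0, total).
    digest = hashlib.sha256(f"{activity}:{mcid}".encode("utf-8")).digest()
    point = (int.from_bytes(digest[:8], "big") >> 11) / 2**53 * total
    cumulative = 0.0
    chosen = ""
    for name, weight in sorted(weights.items()):  # sorted so the layout is stable
        if weight <= 0:
            continue  # a zero-weight Experience receives no traffic
        chosen = name
        cumulative += weight
        if point < cumulative:
            return name
    return chosen  # reached only if floating-point rounding pushes point to the top edge
```

Hashing rather than drawing a fresh random number keeps a returning visitor in the same Experience, and including the activity name in the hash keeps assignments in one test independent of those in another.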

After the test period, we analyzed the results in Adobe Analytics and found that the Popular model performed best of all the models. The integration was a great success, and we plan to apply it to future use cases.